One of my first duties when I began as Digital Asset Manager was to select the systems the team would use to access content. The team of 25+ video editors was working off a traditional server that dropped connections multiple times a day. This forced the editors to pull projects onto their desktops to work on them, then push them back up to the server whenever it was convenient. Not only were resources vulnerable to loss while they sat on desktops without proper backup, but what was moved back to the server was often missing essential components. On top of that, the aggregate source material used in the video compilations was siloed and inaccessible to other members of the video team, a problem compounded by the ad hoc folder structure each editor used.

A secondary issue our architecture plan needed to address was offsite data backup. Because the server was standard issue, so was its backup solution, which saved every bit of data generated in perpetuity, whether or not it was worth saving.

1. The first order of business was to establish a defined folder structure for each component of each project so that the location of the aggregate assets would be reliable. This was essential for getting everyone on the same page, and it would later enable automatic cataloging and metadata capture for our assets.

2. I investigated our options for the server connection issues and determined that the right answer was a server designed specifically for our use case. After talking with people from similar teams in the UC system and even at the American Film Institute, we selected Facilis as our hardware. With Facilis, user connections were stable, and I was able to create each project as its own mountable volume with unique permissions. This reduced vulnerabilities by limiting access to only the team members working on a given project, and it allowed us to use the 128TB of space on the server more strategically.

3. With the server in place and the work environment stable, I turned my attention to our DAM solution and our storage choice for off-site backups, which went hand in hand. I exhaustively researched DAMs and MAMs, comparing features, price points, and available storage integrations. By that point I had already started conversations with Backblaze, and we felt their affordable object storage model was the right choice for us. The CatDV MAM rose to the top of our shortlist because of its features and price point, but also because of the integrations it offered with our other selected vendors.
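The defined folder structure from step 1 is what makes everything downstream predictable, so it is worth scaffolding rather than building by hand. Below is a minimal Python sketch of that idea. The template folder names (`01_Source/Footage`, `03_Exports/Final`, and so on), the `COURSE101` volume, and the `build_unit_folders` helper are all hypothetical placeholders, not the team's actual structure.

```python
import tempfile
from pathlib import Path

# Hypothetical template: the team's actual folder names aren't given in the
# text, so these are illustrative placeholders.
PROJECT_TEMPLATE = [
    "01_Source/Footage",
    "01_Source/Audio",
    "01_Source/Graphics",
    "02_Project_Files",
    "03_Exports/Review",
    "03_Exports/Final",
]


def build_unit_folders(volume_root: Path, units: list[str]) -> list[Path]:
    """Create the same folder tree under every unit folder of a project volume.

    mkdir(parents=True, exist_ok=True) makes the call idempotent, so it can be
    rerun when new units are added without disturbing existing folders.
    """
    created = []
    for unit in units:
        for sub in PROJECT_TEMPLATE:
            path = volume_root / unit / sub
            path.mkdir(parents=True, exist_ok=True)
            created.append(path)
    return created


if __name__ == "__main__":
    # Build two units in a throwaway directory standing in for a project volume.
    with tempfile.TemporaryDirectory() as tmp:
        dirs = build_unit_folders(Path(tmp) / "COURSE101", ["Unit_01", "Unit_02"])
        print(len(dirs))  # 2 units x 6 template folders = 12
```

Because every unit gets an identical tree, a file's path alone tells you which course, unit, and asset type it belongs to, which is what later makes automatic metadata capture possible.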

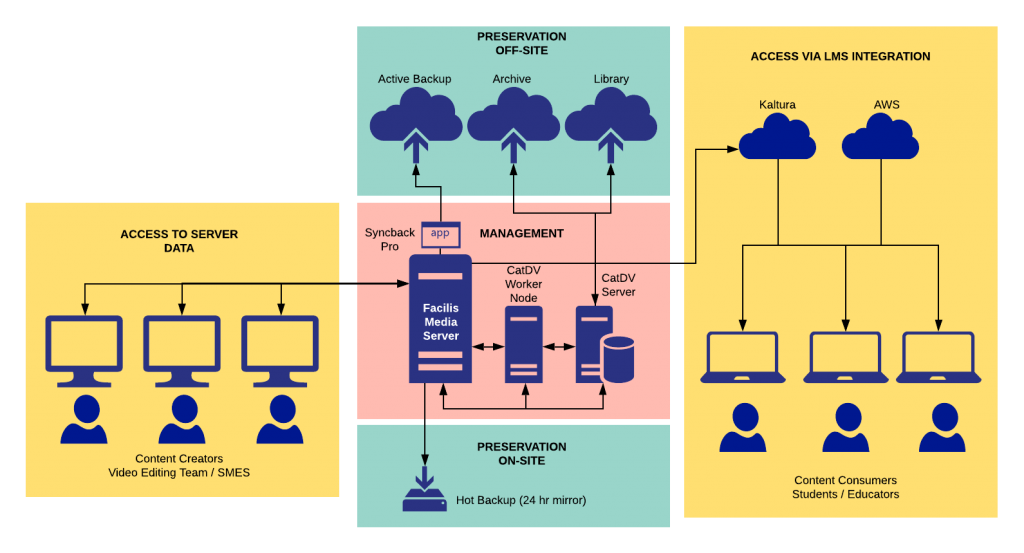

After much thought (and a lot of whiteboard sessions), I established the following architecture for our data flow. The system that supports the media services has a lot of moving parts that need constant upkeep. Read below to see how each piece contributes to the whole.

Facilis Media Server – Each project is housed in its own volume with unique permissions, so only users actively working on a project have read/write access to it. Project volumes are created with a default size of 2TB, and the occasional project is resized if space gets tight.
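The per-project volume model can be sketched as simple bookkeeping: each volume has a size and a set of users with read/write access, and new volumes or resizes are only allowed while unallocated space remains. This is a hypothetical Python model, not the Facilis management API; the class and method names are invented for illustration, and only the 128TB capacity and 2TB default come from the text.

```python
from dataclasses import dataclass, field

SERVER_CAPACITY_TB = 128  # total space on the media server, per the text
DEFAULT_VOLUME_TB = 2     # default project volume size, per the text


@dataclass
class ProjectVolume:
    name: str
    size_tb: float = DEFAULT_VOLUME_TB
    users: set[str] = field(default_factory=set)  # users granted read/write

    def can_write(self, user: str) -> bool:
        return user in self.users


@dataclass
class MediaServer:
    capacity_tb: float = SERVER_CAPACITY_TB
    volumes: dict[str, ProjectVolume] = field(default_factory=dict)

    def allocated_tb(self) -> float:
        return sum(v.size_tb for v in self.volumes.values())

    def _check_room(self, extra_tb: float) -> None:
        if self.allocated_tb() + extra_tb > self.capacity_tb:
            free = self.capacity_tb - self.allocated_tb()
            raise RuntimeError(f"only {free} TB unallocated")

    def create_volume(self, name: str, users: list[str]) -> ProjectVolume:
        self._check_room(DEFAULT_VOLUME_TB)
        vol = ProjectVolume(name, users=set(users))
        self.volumes[name] = vol
        return vol

    def resize(self, name: str, new_size_tb: float) -> None:
        vol = self.volumes[name]
        self._check_room(new_size_tb - vol.size_tb)  # only growth needs free space
        vol.size_tb = new_size_tb


if __name__ == "__main__":
    server = MediaServer()
    vol = server.create_volume("COURSE101", ["editor_a", "editor_b"])
    server.resize("COURSE101", 4)
    print(vol.can_write("editor_a"), vol.can_write("editor_c"))  # True False
    print(server.allocated_tb())  # 4
```

At the 2TB default, 128TB holds 64 project volumes, so every resize directly reduces how many new projects can be opened; tracking allocation this way makes that trade-off visible.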

SyncbackPro – This application on the Facilis server pushes mirror backups to our Backblaze Active Backup bucket. Once a course begins post-production and its unit folders are built out, the project is added to the SyncbackPro backup queue to mirror to off-site storage. In the backup script, I exclude files and directories that would be no loss if something catastrophic happened. These active backups save the meat of what's being created but trim the fat, so we aren't paying to store unnecessary files.
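The "trim the fat" step amounts to a filter applied before anything is mirrored. The sketch below shows that decision in Python. It is an assumption-laden model: the real filters live in the SyncbackPro profile, and the excluded directory names and file patterns here are hypothetical examples of regenerable editing caches, not the author's list. Paths are relative to the project volume and use `/` separators.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Hypothetical exclusions: the real SyncbackPro filter list isn't given in the
# text. These are caches an editing application can rebuild after a restore.
EXCLUDED_DIRS = {"Render Files", "Media Cache Files", "Auto-Save"}
EXCLUDED_FILE_PATTERNS = ["*.tmp", "*.pek", "*.cfa", ".DS_Store"]


def should_mirror(rel_path: str) -> bool:
    """Return True if a file (path relative to the project volume) belongs in the mirror."""
    p = PurePosixPath(rel_path)
    # Skip anything inside an excluded directory, at any depth.
    if any(part in EXCLUDED_DIRS for part in p.parts[:-1]):
        return False
    # Skip files whose name matches an excluded pattern (case-sensitive match).
    return not any(fnmatchcase(p.name, pat) for pat in EXCLUDED_FILE_PATTERNS)


def files_to_mirror(rel_paths: list[str]) -> list[str]:
    return [p for p in rel_paths if should_mirror(p)]


if __name__ == "__main__":
    print(files_to_mirror([
        "Unit_01/02_Project_Files/edit.prproj",
        "Unit_01/Render Files/preview.mxf",
        "Unit_01/01_Source/Audio/vo.wav.cfa",
    ]))  # ['Unit_01/02_Project_Files/edit.prproj']
```

Excluding by directory first keeps the rule set small: one entry drops an entire cache tree, while file patterns catch stray sidecar files scattered through source folders.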

Active Backup – This Backblaze bucket holds the build files and assets of courses currently in post-production (with unnecessary files and directories excluded from the mirror), as well as the files in the Asset Library volume. When a course is archived and removed from the server, its backup files are automatically deleted after 30 days by workflow rules I established to reduce redundant data across the buckets.
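One way to implement a 30-day cleanup like this in Backblaze B2 is a bucket lifecycle rule. The Python sketch below assumes that approach, and also assumes the mirror's deletions show up in B2 as hidden file versions; the text says only that files are deleted after 30 days, not how. The rule dictionary follows the field names B2 documents for lifecycle rules (`fileNamePrefix`, `daysFromUploadingToHiding`, `daysFromHidingToDeleting`), while `earliest_purge` is a hypothetical helper for reasoning about timing.

```python
import json
from datetime import datetime, timedelta, timezone

# Assumed configuration: a B2 lifecycle rule in the documented JSON shape.
ACTIVE_BACKUP_RULE = {
    "fileNamePrefix": "",               # empty prefix = applies to the whole bucket
    "daysFromUploadingToHiding": None,  # never auto-hide current files
    "daysFromHidingToDeleting": 30,     # purge hidden (deleted) versions after 30 days
}


def earliest_purge(hidden_at: datetime, rule: dict = ACTIVE_BACKUP_RULE) -> datetime:
    """Earliest time a file version hidden at `hidden_at` becomes eligible for deletion."""
    return hidden_at + timedelta(days=rule["daysFromHidingToDeleting"])


if __name__ == "__main__":
    # Lifecycle rules are set as a JSON list on the bucket.
    print(json.dumps([ACTIVE_BACKUP_RULE], indent=2))
    removed = datetime(2024, 1, 1, tzinfo=timezone.utc)
    print(earliest_purge(removed).date())  # 2024-01-31
```

The date is a lower bound. Lifecycle processing runs on the provider's schedule, so the actual purge can land somewhat later, which is fine for a cost-control rule.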

Archive Storage – Another bucket in our Backblaze storage, this location houses the files pushed up from CatDV once a course volume has been closed and can go “on the shelf.” The media remain visible and discoverable in CatDV through proxies and stored metadata, and they can be recovered easily through the DAM.

Library Storage – This dedicated bucket is the off-site backup location for every published video the team creates, each embedded with rights metadata in case anything gets released into the wild. As with Archive Storage, data moves to Library Storage on a CatDV command.

Hot Backup – This server is managed by our on-site ITS team and holds a rolling 24-hour mirror of the media server. It’s come in handy more than once, but if a file that has been gone for longer than 24 hours needs to be recovered, I turn to the off-site Active Backup.
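The restore decision described here can be written as a small rule. Here is a Python sketch of it: losses inside the 24-hour window come from the on-site Hot Backup, older ones from the off-site Active Backup, and files from closed courses come back through CatDV from Archive Storage. The function name and its `course_archived` flag are hypothetical; only the three sources and the 24-hour window come from the text.

```python
from datetime import datetime, timedelta

HOT_BACKUP_WINDOW = timedelta(hours=24)  # rolling mirror window, per the text


def recovery_source(lost_at: datetime, now: datetime, course_archived: bool = False) -> str:
    """Pick where to restore a lost file from."""
    if course_archived:
        # Closed courses live in Archive Storage and are restored through CatDV.
        return "Archive Storage (via CatDV)"
    if now - lost_at <= HOT_BACKUP_WINDOW:
        return "Hot Backup"  # still inside the on-site 24-hour mirror
    return "Active Backup"   # older losses come from the off-site mirror


if __name__ == "__main__":
    now = datetime(2024, 5, 2, 12, 0)
    print(recovery_source(datetime(2024, 5, 2, 9, 0), now))   # Hot Backup
    print(recovery_source(datetime(2024, 4, 29, 9, 0), now))  # Active Backup
```

Checking the on-site mirror first is the cheaper path. It avoids off-site egress and transfer time, so the off-site copy only comes into play once the rolling window has passed.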

CatDV – Our DAM/MAM database. Assets are ingested in a variety of ways depending on their lifecycle stage at the time of ingest, and much of the metadata is captured automatically from the media path on ingest.
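Capturing metadata from the media path works because the folder structure is fixed: each directory level carries a known meaning. The Python sketch below illustrates that idea. The mount root `/Volumes`, the level order (course, unit, stage, asset type), and the field names are hypothetical assumptions, not CatDV's own configuration.

```python
from pathlib import PurePosixPath

# Hypothetical layout, e.g. /Volumes/COURSE101/Unit_01/01_Source/Footage/clip.mov:
#   <mount root>/<course volume>/<unit>/<stage folder>/<asset type>/.../<file>
FIELDS = ("Course", "Unit", "Stage", "Asset Type")


def metadata_from_path(media_path: str, mount_root: str = "/Volumes") -> dict[str, str]:
    """Derive catalog fields from where a file sits in the folder structure."""
    # relative_to raises ValueError if the file is outside the mount root.
    rel = PurePosixPath(media_path).relative_to(mount_root)
    if len(rel.parts) <= len(FIELDS):
        raise ValueError(f"path does not follow the expected layout: {media_path}")
    metadata = dict(zip(FIELDS, rel.parts))  # first four levels map to fields
    metadata["File Name"] = rel.name
    return metadata


if __name__ == "__main__":
    print(metadata_from_path("/Volumes/COURSE101/Unit_01/01_Source/Footage/interview_a.mov"))
```

Rejecting paths that don't fit the layout, rather than guessing, keeps stray files from being cataloged with wrong course or unit values.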

The production groups include:

The metadata in use on the CatDV server is a hybrid of existing schemas and our own custom schemas. CatDV is the trigger that pushes media to and pulls media from our off-site storage buckets. Once a course is closed and cataloged, its data is pushed to the off-site storage bucket and the volume is removed from the Facilis server.

CatDV Worker Node Server – This is where the magic happens. Custom workflows programmed into the worker node server use the metadata as handles to move data through processes or to different locations.
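The idea of metadata as handles can be modeled as an ordered list of rules, each pairing a metadata condition with a destination. The Python sketch below shows that pattern for the Archive and Library moves described above. It is not CatDV's workflow format or API. The field names (`Lifecycle Stage`, `Cataloged`), their values, and the `Rule`/`route` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Metadata = dict[str, str]


@dataclass(frozen=True)
class Rule:
    name: str
    condition: Callable[[Metadata], bool]  # metadata "handle" that triggers the rule
    destination: str                        # where matching assets are sent


# Hypothetical field names and values; the real CatDV fields aren't listed in the text.
RULES = [
    Rule("Archive closed course",
         lambda m: m.get("Lifecycle Stage") == "Closed" and m.get("Cataloged") == "Yes",
         "Archive Storage"),
    Rule("Back up published video",
         lambda m: m.get("Lifecycle Stage") == "Published",
         "Library Storage"),
]


def route(metadata: Metadata, rules: list[Rule] = RULES) -> Optional[str]:
    """Return the destination of the first rule whose condition matches, else None."""
    for rule in rules:
        if rule.condition(metadata):
            return rule.destination
    return None


if __name__ == "__main__":
    print(route({"Lifecycle Stage": "Closed", "Cataloged": "Yes"}))  # Archive Storage
    print(route({"Lifecycle Stage": "In Production"}))               # None
```

First-match semantics make precedence explicit: if an asset could satisfy two rules, its position in the list decides where it goes. Requiring `Cataloged` before archiving reflects the text's ordering, where a course is cataloged before its data leaves the server.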